In today’s digital landscape, ensuring that your website is discoverable by search engines and human users is essential for success. While keywords and content are crucial for SEO, another critical aspect often goes unnoticed: crawlability.

With billions of web pages and websites, search engine bots play a vital role in exploring and understanding the vast amount of information available. These bots, also known as spiders, crawl through websites, following links and compiling data to create an index of URLs. This index forms the foundation for search engine algorithms to rank and display relevant results.

Crawlability refers to how easily search engine bots can reach and scan your web pages. If your site has poor crawlability, for example because of broken links and dead ends, crawlers cannot access all of your content, so some of it never gets indexed and your visibility in search results drops.

Indexability is equally crucial, as pages that are not indexed will not appear in search results. If search engines haven’t included a page in their database, it cannot be ranked or displayed to users.

By understanding and addressing crawlability issues, you can ensure that your website is accessible to search engine bots and human users alike. In this guide, we will explore common crawlability problems, their impact on SEO, and, most importantly, how to fix them. Let’s get started!

The Impact of Crawlability Issues on SEO

Crawlability issues can have a significant impact on your website’s SEO performance. When search engines encounter crawlability problems, it can hinder their ability to effectively discover and index your web pages. Here’s how crawlability issues affect SEO:

- Incomplete Indexing: If search engines cannot crawl and index your web pages due to crawlability issues, those pages may not appear in search results. This results in missed opportunities for organic visibility, reducing the chances of attracting organic traffic and potential conversions.

- Reduced Organic Traffic: When your pages are not indexed, they are invisible to search engine users. Your website may receive less organic traffic than it deserves, as it won’t appear in relevant search queries. Crawlability issues can restrict the flow of organic traffic to your website.

- Decreased Visibility and Rankings: Search engines rely on crawling and indexing to understand the content and relevance of your web pages. If crawlability issues prevent search engines from accessing and analyzing your content, it can negatively impact your rankings. Without proper crawlability, your pages may not rank as high in search results, resulting in decreased visibility and fewer opportunities to attract organic traffic.

- Missed Conversion Opportunities: Crawlability issues can hinder users from finding your website and its relevant pages. If potential customers cannot discover your site through search engines, it can lead to missed conversion opportunities. Improving crawlability ensures your web pages are accessible to search engine users, increasing the chances of driving relevant traffic and conversions.

Common Crawlability Issues and How to Fix Them

Crawl errors can be a hurdle for your website’s performance, but the good news is that they can be resolved. Let’s explore some of the most common crawl errors and how to address them effectively.

Crawlability Issue 1: 404 Errors

404 errors occur when a web page is not found on the server. While these errors don’t directly impact rankings, they can affect the overall user experience if not addressed.

Solution: To resolve 404 errors, review the list of missing URLs and set up 301 (permanent) redirects from each one to the equivalent page on the live site. If a page was deleted on purpose and has no replacement, you can instead serve a 410 HTTP status code to tell search engines that it is permanently gone. Consider the specific cause of each error to choose between the two.
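As a rough illustration of the redirect-or-410 choice, here is a minimal sketch of a WSGI app written with only the Python standard library. The paths in `REDIRECTS` and `GONE`, and the helper `status_for`, are hypothetical examples; on a real site this logic usually lives in the web server or CMS configuration instead.

```python
# Hypothetical examples: old paths that have an equivalent live page.
REDIRECTS = {"/old-pricing": "/pricing"}
# Hypothetical examples: pages removed on purpose, with no replacement.
GONE = {"/summer-sale-2019"}


def app(environ, start_response):
    """Minimal WSGI app: 301 for moved pages, 410 for deleted ones."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]
    if path in GONE:
        start_response("410 Gone", [("Content-Type", "text/plain")])
        return [b"This page has been permanently removed."]
    # Anything else is treated as an ordinary missing page in this sketch.
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found."]


def status_for(path):
    """Call the app the way a WSGI server would; return (status, headers)."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    app({"PATH_INFO": path}, start_response)
    return captured["status"], captured["headers"]
```

Calling `status_for("/old-pricing")` returns `('301 Moved Permanently', {'Location': '/pricing'})`, while `status_for("/summer-sale-2019")` reports `410 Gone`.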

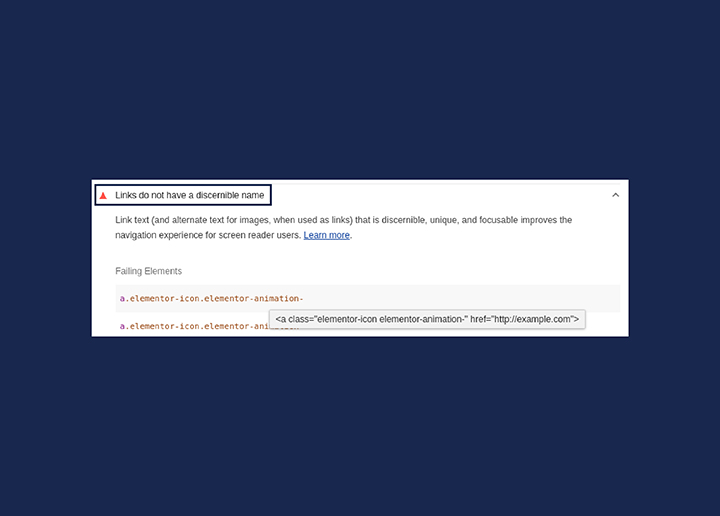

Crawlability Issue 2: Blocked Pages

If specific pages on your website are unintentionally blocked from crawling by search engine bots, it can hinder their ability to index those pages.

Solution: Check your robots.txt file and ensure it doesn’t block essential pages or sections of your website. Modify the file if necessary to allow proper crawling and indexing of your desired pages.
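One way to run this check is with Python’s standard `urllib.robotparser` module. The sketch below assumes you already have the robots.txt text and a list of pages you consider essential; the function name `blocked_urls`, the sample rules, and the URLs are made up for illustration. The parser’s matching may not mirror every search engine exactly, so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks the whole blog by mistake.
robots = """User-agent: *
Disallow: /blog/
"""

# Hypothetical list of pages that should stay crawlable.
essential = [
    "https://example.com/blog/post",
    "https://example.com/pricing",
]
```

Here `blocked_urls(robots, essential)` returns `['https://example.com/blog/post']`, which signals that the `Disallow: /blog/` rule needs to be narrowed or removed.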

Crawlability Issue 3: Page Duplicates

Page duplicates occur when multiple URLs load the same content, making it harder for search engines to determine which page to prioritize. This can lead to indexing issues and waste the crawl budget on repetitive content.

Solution: Use the rel=canonical link tag to tell search engines which URL is the original or “canonical” page. Add the tag to every duplicate, pointing at the preferred URL, so that search engines crawl and index the version you want.
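To audit which canonical URL a page declares, a small parser built on the standard `html.parser` module is enough for a spot check. This is a sketch under the assumption that the canonical is declared in the HTML (not in an HTTP header); `CanonicalFinder` and `find_canonical` are illustrative names.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical
```

Running `find_canonical` over each URL in a group of duplicates should return the same preferred URL every time; a `None` or a mismatch points at a page that still needs the tag.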

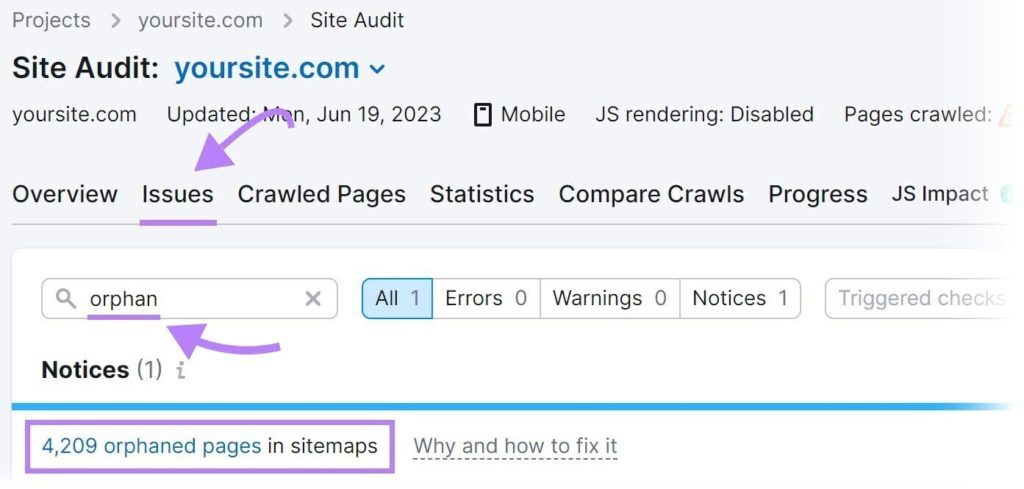

Crawlability Issue 4: Wrong Pages in the Sitemap

Incorrect URLs in your XML sitemap can mislead search engine bots and prevent them from indexing important pages. It’s essential to maintain an accurate and up-to-date sitemap.

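Solution: Regularly review and update your XML sitemap, removing outdated or irrelevant URLs. Follow the sitemap protocol’s guidelines on size, encoding, and structure: a single sitemap file may list at most 50,000 URLs, may be at most 50 MB uncompressed, and must be UTF-8 encoded.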
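A sitemap review can be partly automated. The sketch below parses a sitemap with the standard `xml.etree.ElementTree` module and compares it with a set of URLs you know to be live. The function `audit_sitemap` and the idea of a ready-made `live_urls` set are assumptions for illustration; the 50,000-URL limit per file comes from the sitemap protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemap protocol


def audit_sitemap(sitemap_xml, live_urls):
    """Compare the URLs listed in a sitemap with the URLs known to be live."""
    root = ET.fromstring(sitemap_xml)
    listed = [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]
    return {
        # In the sitemap but no longer on the site: remove these entries.
        "stale": [url for url in listed if url not in live_urls],
        # On the site but not in the sitemap: consider adding them.
        "missing": sorted(set(live_urls) - set(listed)),
        "too_many_urls": len(listed) > MAX_URLS_PER_SITEMAP,
    }
```

The `stale` list holds the wrong pages to remove, and `missing` holds live pages the sitemap has not caught up with yet.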

Crawlability Issue 5: Lack of Internal Links

Pages without internal links can hinder crawlability, making it difficult for search engines to discover and index those pages.

Solution: To address this, identify orphan pages (pages without internal links) and add relevant internal links from other pages on your website.

This helps search engines navigate and crawl these pages more effectively, improving their visibility and indexing. Updating your XML sitemap to include the newly linked pages also aids in their discovery.
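Finding orphan pages comes down to checking which pages nothing else links to. Here is a minimal sketch, assuming you have already exported your internal link graph (for example from a site crawl) as a dictionary; `find_orphans`, the `entry_points` parameter, and the sample paths are hypothetical.

```python
def find_orphans(links, entry_points=("/",)):
    """Return pages that no other page links to.

    links maps each page to the set of internal pages it links out to.
    Pages in entry_points (such as the homepage) are never reported.
    """
    linked_to = set()
    for source, targets in links.items():
        # A page linking to itself does not make it discoverable.
        linked_to.update(target for target in targets if target != source)
    return sorted(
        page for page in links
        if page not in linked_to and page not in entry_points
    )


# Hypothetical link graph: nothing points at /old-landing.
site_links = {
    "/": {"/blog", "/pricing"},
    "/blog": {"/"},
    "/pricing": set(),
    "/old-landing": {"/pricing"},
}
```

With this data, `find_orphans(site_links)` returns `['/old-landing']`, the page that needs an internal link from somewhere else on the site.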

Crawlability Issue 6: Slow Load Speed

Slow-loading pages contribute to a poor user experience and also limit crawlability: when responses are slow, search engine bots get through fewer pages in the time they spend on your site. Faster pages let bots crawl more efficiently, which supports organic search visibility.

Solution: Optimize your website’s load speed by minifying CSS and JavaScript files, compressing image files, leveraging browser caching, and using content delivery networks (CDNs). Regularly measure your site’s load speed using tools like Google Lighthouse and implement recommended optimizations.
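Two of these optimizations, compression and browser caching, show up in the HTTP response headers, so they can be spot-checked with a few lines of code. The following is only a rough sketch for static assets such as CSS, JavaScript, and images; `speed_header_warnings` is an illustrative name, and the rules are deliberately simple compared with what a tool like Lighthouse checks.

```python
COMPRESSED_ENCODINGS = {"gzip", "br", "deflate", "zstd"}


def speed_header_warnings(headers):
    """Return basic warnings based on a static asset's response headers."""
    normalized = {name.lower(): value.lower() for name, value in headers.items()}
    warnings = []

    encodings = {
        token.strip()
        for token in normalized.get("content-encoding", "").split(",")
    }
    if not encodings & COMPRESSED_ENCODINGS:
        warnings.append("response is not compressed")

    cache_control = normalized.get("cache-control", "")
    if "max-age" not in cache_control or "no-store" in cache_control:
        warnings.append("no browser caching policy")

    return warnings
```

An asset served with `Content-Encoding: gzip` and `Cache-Control: public, max-age=31536000` produces an empty list, while one served with neither header produces both warnings.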

Crawlability Issue 7: Using HTTP Instead of HTTPS

Security is now a baseline expectation on the web, so migrating from HTTP to HTTPS has become crucial. Search engines favor HTTPS pages (Google treats HTTPS as a ranking signal), and staying on HTTP can negatively impact crawlability and rankings.

Solution: Obtain an SSL certificate for your website and migrate to HTTPS. This ensures a secure and encrypted connection between users and your website, improving crawlability and search engine rankings.
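After a migration, internal links that still point at `http://` URLs are a common leftover. The sketch below flags such links and proposes the HTTPS version, using only `urllib.parse` from the standard library. The function name `insecure_internal_links` and the sample host are assumptions, and the list of links is expected to come from your own crawl or CMS export.

```python
from urllib.parse import urlsplit, urlunsplit


def insecure_internal_links(links, site_host):
    """Map each internal http:// link to its https:// equivalent."""
    fixes = {}
    for link in links:
        parts = urlsplit(link)
        # Only rewrite links to our own host; external sites may not support HTTPS.
        if parts.scheme == "http" and parts.hostname == site_host:
            fixes[link] = urlunsplit(parts._replace(scheme="https"))
    return fixes


# Hypothetical links collected from a site crawl.
found_links = [
    "http://example.com/pricing?plan=pro",
    "https://example.com/blog",
    "http://other.org/page",
]
```

For this sample, `insecure_internal_links(found_links, "example.com")` returns `{'http://example.com/pricing?plan=pro': 'https://example.com/pricing?plan=pro'}`, leaving the already secure link and the external link untouched.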

Crawlability Issue 8: Non-Mobile-Friendly Website

With mobile-first indexing, search engines prioritize the mobile version of your website. Failing to have a mobile-friendly website can hinder crawlability and impact rankings.

Solution: Adopt responsive design practices, ensure your website is mobile-friendly, and optimize pages for both mobile and desktop devices.
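A responsive page normally declares a viewport meta tag with `width=device-width`, so its absence is a quick warning sign. The sketch below checks for that tag with the standard `html.parser` module; `ViewportFinder` and `has_responsive_viewport` are illustrative names. Passing this check does not prove a page is mobile-friendly, it only rules out one common omission.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares a viewport with width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()
```

Pages for which `has_responsive_viewport` returns `False` are good first candidates for a closer look on a real phone or in a mobile testing tool.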

Wrap Up

Crawlability issues can have a significant impact on your website’s SEO performance. Common problems such as 404 errors, pages blocked by robots.txt, page duplicates, wrong pages in the sitemap, lack of internal links, slow load speed, not using HTTPS, and a non-mobile-friendly site can prevent search engines from effectively crawling and indexing your web pages.

The good news is that these crawlability issues can be fixed. By implementing the suggested solutions, such as redirecting 404 errors, correcting your robots.txt file, implementing URL canonicalization, updating sitemaps, adding internal links, optimizing load speed, migrating to HTTPS, and adopting responsive design, you can improve crawlability, enhance search engine visibility, and ultimately boost your website’s SEO performance.

Remember that crawlability is crucial for search engines to understand and rank your web pages. Addressing and resolving crawlability issues creates a solid foundation for search engines to access and index your content, leading to better organic visibility and increased website traffic.

Fix All Your Crawlability Errors With Seahawk

Tired of dealing with crawlability issues? Let Seahawk optimize your website and ensure smooth indexing by search engines.