Il est important de mettre constamment à jour votre site pour qu’il reste impeccable, propre et à jour afin d’améliorer ses performances dans les moteurs de recherche. Cependant, l’examen de chaque page de votre site peut être une tâche compliquée à effectuer.

Ainsi, dans l’espace Web numériquement avancé, le rôle des robots d’indexation devient plus important que jamais. Un robot d’indexation est un robot qui parcourt numériquement votre site Web et aide à indexer les pages Web, à collecter des données et à permettre aux moteurs de recherche de fournir des résultats de qualité.

Comprendre les différents types de robots d’indexation et leurs fonctions peut fournir des informations précieuses pour optimiser votre site Web et garder une longueur d’avance dans le jeu du référencement . Ainsi, aujourd’hui, nous présentons une liste complète de robots d’exploration qui peut être utile pour les webmasters et les professionnels du référencement.

Contenu

ToggleComprendre les robots d’indexation

Les robots d’indexation, également connus sous le nom de robots d’indexation, sont des programmes automatisés qui se déplacent d’avant en arrière sur le Web, organisant le contenu à des fins d’optimisation des moteurs de recherche, de collecte de données et de surveillance.

Ils sont essentiels pour indexer les pages Web avec des moteurs de recherche tels que Google, Bing et d’autres utilisant un jeton d’agent utilisateur pour s’identifier lors de l’accès à des sites Web.

Comprendre le fonctionnement de divers robots d’indexation Web à l’aide d’une liste complète peut aider à optimiser les pages de destination pour les moteurs de recherche.

Lire la suite : Qu’est-ce qu’un robot d’indexation ?

Comment fonctionnent les robots d’indexation

Les robots d’indexation analysent systématiquement les pages Web et indexent les données pour les stocker dans l’index d’un moteur de recherche afin d’être récupérées en réponse aux requêtes de recherche des utilisateurs. Il suit les liens d’une page à l’autre et adhère au protocole robots.txt, ce qui aide les robots d’exploration des moteurs de recherche à savoir à quelles URL ils peuvent accéder sur votre site.

Les développeurs ou les spécialistes du marketing peuvent spécifier dans leur robots.txt sur leur site s’ils approuvent ou refusent certains robots d’exploration en fonction de leur jeton d’agent utilisateur.

Comprendre le processus d’exploration peut rendre votre site Web plus accessible et visible pour les moteurs de recherche comme Google et Bing. Ces connaissances peuvent finalement améliorer le classement et la visibilité de votre site Web dans les résultats de recherche.

Types de robots d’indexation

Il existe trois principaux types de robots d’indexation :

- Les robots d’indexation généraux, également connus sous le nom de robots d’indexation ou d’indexation, parcourent systématiquement les pages Web pour collecter des données pour l’indexation des moteurs de recherche. Les moteurs de recherche utilisent ces informations pour classer et présenter les résultats de recherche.

- Les robots d’exploration ciblés ciblent des types de contenu ou de sites Web spécifiques. Ils sont conçus pour recueillir des informations sur un sujet ou un domaine particulier.

- Les robots d’exploration incrémentiels analysent uniquement les pages Web qui ont été mises à jour depuis la dernière analyse. Cela leur permet de collecter efficacement du contenu nouveau ou modifié sans avoir à explorer à nouveau l’ensemble du site Web.

Les robots d’exploration ciblés, quant à eux, collectent les pages Web qui adhèrent à une propriété ou à un sujet spécifique, en hiérarchisant stratégiquement la frontière de l’exploration et en maintenant une collection de pages pertinentes.

Les robots d’exploration incrémentiels revisitent les URL et réexplorent les URL existantes pour maintenir les données analysées à jour, ce qui les rend idéales pour les scénarios nécessitant des données mises à jour et cohérentes.

En relation : Comment fonctionne le moteur de recherche - Exploration, indexation et classement ?

Top 14 des listes de robots d’indexation à connaître en 2023

Cette liste complète de robots d’indexation détaille les robots d’indexation les plus courants, en soulignant leur rôle dans l’indexation, la collecte et l’analyse des moteurs de recherche. Ces robots d’exploration sont les suivants :

- Googlebot (en anglais seulement)

- Bingbot (en anglais seulement)

- Yandex Bot

- Google Bard

- Openai ChatGPT

- Robot d’indexation Facebook

- Robot de Twitter

- Pinterestbot (en anglais seulement)

- AhrefsBot (en anglais seulement)

- SemrushBot (en anglais seulement)

- Robot d’exploration de la campagne de Moz Rogerbot

- Apache Nutch

- Grenouille hurlante

- HTTrack (en anglais seulement)

Nous explorerons chacun d’entre eux dans la liste des robots d’exploration, en nous concentrant sur leurs rôles et fonctionnalités uniques.

Googlebot (en anglais seulement)

Googlebot, également connu sous le nom d’agent utilisateur Googlebot, est le principal robot d’indexation de Google. Il est responsable de l’indexation et du rendu des pages pour le moteur de recherche. Il explore les sites Web de Google en suivant des liens, en analysant les pages Web et en respectant les règles de robots.txt, ce qui garantit que le contenu du site Web est accessible au moteur de recherche de Google.

Il est important de se familiariser avec Googlebot, car son processus d’exploration peut considérablement améliorer le classement et la visibilité de votre site Web dans les moteurs de recherche.

Bingbot (en anglais seulement)

Bingbot est le robot d’indexation de Microsoft pour le moteur de recherche Bing, avec une approche d’indexation axée sur le mobile. Il se concentre sur l’indexation de la version mobile des sites Web, en mettant l’accent sur le contenu adapté aux mobiles dans les résultats de recherche pour répondre à la nature centrée sur le mobile de la navigation moderne.

Il est similaire à Googlebot, et le principal moteur de recherche chinois est un robot d’exploration crucial pour ceux qui veulent que leur contenu soit découvrable sur plusieurs moteurs de recherche.

Yandex Bot

Yandex Bot est le robot d’indexation du moteur de recherche russe Yandex, donnant la priorité à l’écriture cyrillique et au contenu en langue russe. Il est responsable de l’exploration et de l’indexation des sites Web principalement en russe, répondant aux besoins spécifiques du public russophone.

Yandex Bot est un robot d’indexation crucial pour ceux qui ciblent le marché russe afin d’optimiser leur contenu.

Google Bard

Google Bard est un robot d’indexation pour les API génératives Bard et Vertex AI de Google, qui aide les éditeurs Web à gérer les améliorations du site. Il peut aider les éditeurs Web à gérer les améliorations du site en offrant des réponses plus précises, en s’intégrant aux applications et services Google et en permettant aux éditeurs de réguler les données d’entraînement de l’IA.

Il améliore la visibilité du contenu source et fournit de véritables citations dans les réponses, ce qui en fait un outil précieux pour les éditeurs Web qui cherchent à optimiser leur contenu.

Openai ChatGPT

Openai ChatGPT est un robot d’indexation utilisé par OpenAI pour l’entraînement et l’amélioration de ses modèles de langage. GPTBot collecte des données accessibles au public à partir de sites Web pour améliorer les modèles d’intelligence artificielle tels que GPT-4.

Le robot d’indexation d’Openai ChatGPT affine considérablement les capacités de l’IA, ce qui se traduit par une expérience utilisateur supérieure et des réponses plus précises du chatbot piloté par l’IA.

Robots d’indexation des médias sociaux

Les robots d’indexation des médias sociaux améliorent l’expérience et l’engagement des utilisateurs sur diverses plateformes. Ils indexent et affichent le contenu partagé sur des plateformes telles que Facebook, Twitter et Pinterest, offrant aux utilisateurs un aperçu visuellement attrayant et informatif du contenu Web.

Nous allons maintenant discuter de trois robots d’exploration notables des médias sociaux : Facebook Crawler, Twitterbot et Pinterestbot.

Robot d’indexation Facebook

Facebook Crawler rassemble les informations du site Web partagées sur la plate-forme et génère des aperçus riches, y compris un titre, une courte description et une image miniature. Cela permet aux utilisateurs d’avoir un aperçu rapide du contenu partagé avant de cliquer sur le lien, ce qui améliore l’expérience utilisateur et encourage l’engagement avec le contenu partagé.

Facebook Crawler optimise le contenu partagé pour la plateforme, offrant aux utilisateurs une expérience de navigation visuellement attrayante et informative.

Robot de Twitter

Twitterbot, le robot d’indexation de Twitter, indexe et affiche les URL partagées pour afficher des aperçus de contenu Web sur la plateforme. En générant des cartes d’aperçu avec des titres, des descriptions et des images, Twitterbot fournit aux utilisateurs un instantané du contenu partagé, encourageant ainsi l’engagement et l’interaction des utilisateurs.

Twitterbot optimise le contenu pour la plateforme Twitter, ce qui permet aux utilisateurs de découvrir et d’interagir plus facilement avec le contenu partagé.

Pinterestbot (en anglais seulement)

Il s’agit d’un robot d’indexation pour la plate-forme sociale axée sur le visuel, se concentrant sur l’indexation des images et du contenu à afficher sur la plate-forme. Pinterestbot explore et indexe les images, ce qui permet aux utilisateurs de découvrir et d’enregistrer des inspirations visuelles par le biais d’épingles et de tableaux.

Sa fonction principale est de fournir aux utilisateurs une expérience de navigation visuellement époustouflante et organisée, leur permettant d’explorer et d’interagir avec un contenu adapté à leurs intérêts.

Liste des robots d’indexation des outils SEO

Les robots d’indexation d’outils SEO sont essentiels à la collecte de données pour l’analyse et l’optimisation des performances des sites Web sur diverses plateformes de référencement. Ces robots d’indexation fournissent des informations précieuses sur la structure du site Web, les backlinks et l’engagement des utilisateurs, aidant les propriétaires de sites Web et les spécialistes du marketing à prendre des décisions éclairées pour améliorer leur présence en ligne.

Nous allons maintenant explorer trois robots d’indexation d’outils de référencement populaires : AhrefsBot, SemrushBot et Rogerbot, le robot d’indexation de campagne de Moz.

AhrefsBot (en anglais seulement)

AhrefsBot est un robot d’indexation qui indexe les liens pour le logiciel de référencement Ahrefs. Il visite 6 milliards de sites Web par jour, ce qui en fait le deuxième robot d’exploration le plus actif après Googlebot.

AhrefsBot explore les sites Web pour collecter des informations sur les backlinks, les mots-clés et d’autres facteurs de référencement. Il est utilisé pour éclairer les décisions d’optimisation.

AhrefsBot est un outil précieux pour ceux qui souhaitent améliorer le classement et la visibilité de leur site Web dans les moteurs de recherche. Comprend également les propriétaires de sites Web, les professionnels du référencement et les spécialistes du marketing.

SemrushBot (en anglais seulement)

SemrushBot est un robot d’indexation Web employé par Semrush, un fournisseur de logiciels de référencement de premier plan. Acquérir et cataloguer les données du site Web pour l’utilisation de ses clients sur sa plateforme. Il génère une liste d’URL de pages Web, les visite et stocke certains liens hypertexte pour de futures visites.

Les données de SemrushBot sont utilisées dans plusieurs outils Semrush, notamment :

- Moteur de recherche public de backlinks

- Outil d’audit de site

- Outil d’audit de backlink

- Outil de création de liens

- Assistante d’écriture

Ces outils fournissent des informations précieuses pour optimiser les performances du site Web et les stratégies de référencement.

Robot d’exploration de la campagne de Moz Rogerbot

Rogerbot est un robot d’indexation spécialement conçu pour les audits de sites Moz Pro Campaign. Il est fourni par le principal site de référencement, Moz. Il rassemble le contenu pour les audits de la campagne Moz Pro et suit robots.txt règles pour assurer la conformité avec les préférences des propriétaires de sites Web.

Rogerbot est un outil précieux pour les propriétaires de sites Web et les spécialistes du marketing qui souhaitent améliorer le classement et la visibilité de leur site Web dans les moteurs de recherche. Il utilise des audits de site complets et des stratégies d’optimisation basées sur les données.

En relation : Référencement optimal sur WordPress en 2024 : un guide complet

Robots d’exploration open source

Les robots d’exploration open source offrent flexibilité et évolutivité pour l’exploration de sites Web spécifiques ou l’exploration d’Internet à grande échelle. Ces chenilles peuvent être personnalisées pour répondre à des besoins spécifiques. Cela en fait une ressource précieuse pour les développeurs Web et les professionnels du référencement qui cherchent à optimiser les performances de leur site Web.

Nous allons maintenant nous plonger dans trois robots d’exploration open-source : Apache Nutch, Screaming Frog et HTTrack.

Apache Nutch

- Un robot d’indexation open source flexible et évolutif

- Utilisé pour explorer des sites Web spécifiques ou l’ensemble d’Internet

- basé sur les structures de données Apache Hadoop

- peuvent être configurés de manière détaillée.

Apache Nutch est idéal pour les développeurs Web et les professionnels du référencement qui ont besoin d’un robot d’indexation personnalisable pour répondre à leurs besoins spécifiques, qu’il s’agisse d’explorer un site Web particulier ou de mener des explorations Internet à grande échelle.

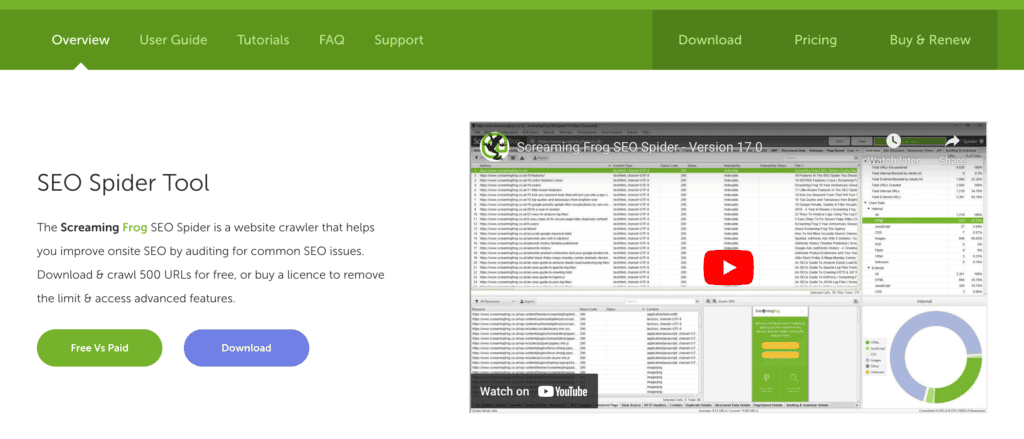

Grenouille hurlante

Screaming Frog est un outil de bureau permettant d’explorer des sites Web d’un point de vue SEO, en présentant des éléments sur site dans des onglets pour une analyse et un filtrage en temps réel. Il est réputé pour son interface conviviale et sa rapidité à produire des résultats techniques qui maximisent les explorations Google.

Screaming Frog est une ressource vitale pour les développeurs Web et les professionnels du référencement qui souhaitent améliorer les performances de leur site Web à l’aide d’audits de site complets et de stratégies d’optimisation basées sur les données.

HTTrack (en anglais seulement)

HTTrack est un logiciel gratuit qui permet de télécharger et de mettre en miroir des sites Web, avec un support pour plusieurs systèmes et de nombreuses fonctionnalités. Il fonctionne en utilisant un robot d’indexation pour récupérer les fichiers du site Web et les organiser dans une structure qui préserve la structure de liens relative du site d’origine.

Cela permet aux utilisateurs de naviguer sur le site Web téléchargé hors ligne à l’aide de n’importe quel navigateur Web. HTTrack est un outil précieux pour les propriétaires de sites Web et les spécialistes du marketing qui souhaitent créer une copie locale d’un site Web à des fins de navigation hors ligne ou de réplication.

Protéger votre site Web contre les robots d’indexation malveillants

Il est essentiel de protéger votre site Web contre les robots d’indexation malveillants pour prévenir la fraude, les attaques et le vol d’informations. L’identification et le blocage de ces robots d’exploration nuisibles peuvent protéger le contenu de votre site Web, les données des utilisateurs et la présence en ligne. Il rend l’expérience de navigation de vos visiteurs sûre et sécurisée.

Nous allons maintenant aborder les techniques d’identification des robots d’exploration malveillants et les méthodes pour bloquer leur accès à votre site Web.

Identification des robots d’exploration malveillants

L’identification des robots d’exploration malveillants implique la vérification des agents utilisateur, y compris la chaîne complète de l’agent utilisateur, la chaîne de l’agent utilisateur sur le bureau, la chaîne de l’agent utilisateur et les adresses IP dans les enregistrements du site.

Vous pouvez faire la différence entre les robots d’indexation légitimes et malveillants en analysant ces caractéristiques. Cela vous aide à prendre les mesures appropriées pour protéger votre site Web contre les menaces potentielles.

La surveillance régulière des journaux d’accès de votre site Web et la mise en œuvre de mesures de sécurité peuvent aider à maintenir un environnement en ligne sécurisé pour vos utilisateurs.

Techniques de blocage

Des techniques telles que l’ajustement des autorisations à l’aide de robots.txt et le déploiement de mesures de sécurité telles que des pare-feu d’applications Web (WAF) et des réseaux de diffusion de contenu (CDN) peuvent bloquer les robots d’exploration malveillants.

L’utilisation de la directive 'Disallow' suivie du nom de l’agent utilisateur du robot d’exploration que vous souhaitez bloquer dans votre fichier robots.txt est un moyen efficace de bloquer certains robots d’indexation.

En outre, la mise en œuvre d’un WAF peut fournir une protection de site Web contre les robots d’exploration malveillants en filtrant le trafic avant qu’il n’atteigne le site, tandis qu’un CDN peut protéger un site Web contre les robots d’exploration malveillants en acheminant les requêtes vers le serveur le plus proche de l’emplacement de l’utilisateur, réduisant ainsi le risque que des robots attaquent le site Web.

L’utilisation de ces techniques de blocage peut aider à protéger votre site Web contre les robots d’exploration nuisibles et à garantir une expérience de navigation sécurisée pour vos visiteurs.

Relatif : Meilleurs fournisseurs de services de sécurité WordPress de 2023

Vous cherchez à améliorer le référencement de votre site ?

Obtenez toutes vos réponses avec un audit SEO détaillé de votre site web, revenez sur les résultats de recherche

Résumé

En conclusion, les robots d’indexation jouent un rôle essentiel dans le paysage numérique, car ils sont responsables de l’indexation des pages Web, de la collecte de données et de la capacité des moteurs de recherche à fournir des résultats de qualité.

Comprendre les différents types de robots d’indexation et leurs fonctions peut fournir des informations précieuses pour optimiser votre site Web et garder une longueur d’avance dans le monde numérique. En mettant en œuvre des mesures de sécurité et des techniques de blocage appropriées, vous pouvez protéger votre site Web contre les robots d’exploration malveillants et maintenir un environnement en ligne sûr et sécurisé pour vos utilisateurs.

Questions fréquemment posées

Parmi les exemples de robots d’indexation, citons Googlebot (versions de bureau et mobile), Bingbot, DuckDuckBot, Yahoo Slurp, YandexBot, Baiduspider et ExaBot.

Un moteur de recherche d’indexation, également connu sous le nom d’araignée, de robot ou de bot, est un programme automatisé qui parcourt systématiquement les pages Web pour les indexer pour les moteurs de recherche.

Les robots d’indexation sont des programmes informatiques automatisés qui effectuent des recherches sur Internet, souvent appelés « robots ». Différents robots d’indexation se spécialisent dans le web scraping, l’indexation et le suivi de liens. Ils utilisent ces données pour compiler des pages Web pour les résultats de recherche.

Les robots d’indexation des médias sociaux permettent d’indexer et d’afficher du contenu sur plusieurs plateformes, améliorant ainsi l’expérience utilisateur et renforçant l’engagement.

Mettez en œuvre des pare-feu d’applications Web (WAF) et des réseaux de diffusion de contenu (CDN) pour protéger votre site Web contre les robots d’indexation malveillants.